The Dirty Little Secret About Mobile Benchmarks

September 29, 2012

This article has had almost 30,000 views. Thanks for reading it.

When I wrote this article over a year ago, most people believed mobile benchmarks were a strong indicator of device performance. Since then a lot has happened: both Samsung and Intel were caught cheating, and some of the most popular benchmarks are no longer used by leading bloggers because they are too easy to game. By now almost every mobile OEM has figured out how to “game” popular benchmarks including 3DMark, AnTuTu, Vellamo 2 and others. The iPhone hasn’t been called out yet, but Apple has been caught cheating on benchmarks before, so there is a high probability they are employing one or more of the techniques described below, such as driver tricks. Although Samsung and the Galaxy Note 3 have received a bad rap over this, the actual impact on their benchmark results was fairly small, because none of the GPU frequency optimizations that helped the Exynos 5410 scores exist on Snapdragon processors. Even when it comes to the Samsung CPU cheats, this time around the performance deltas were only 0-5%.

11/26/13 Update: 3DMark just delisted mobile devices with suspicious benchmark scores. More info.

2/1/17 Update: XDA just accused Chinese phone manufacturers of cheating on benchmarks. You can read the full article here.

Mobile benchmarks are supposed to make it easier to compare smartphones and tablets. In theory, the higher the score, the better the performance. You might have heard the iPhone 5 beats the Samsung Galaxy S III in some benchmarks. That’s true. It’s also true the Galaxy S III beats the iPhone 5 in other benchmarks, but what does this really mean? And more importantly, can benchmarks really tell us which phone is better than another?

Why Mobile Benchmarks Are Almost Meaningless

- Benchmarks can easily be gamed – Manufacturers want the highest possible benchmark scores and are willing to cheat to get them. Sometimes this is done by optimizing code so it favors a certain benchmark. In this case, the optimization results in a higher benchmark score, but has no impact on real-world performance. Other times, manufacturers cheat by tweaking drivers to ignore certain things, lower the quality to improve performance or offload processing to other areas. The bottom line is that almost all benchmarks can be gamed. Computer graphics card makers found this out a long time ago and there are many well-documented accounts of Nvidia, AMD and Intel cheating to improve their scores.

Here’s an example of this type of cheating: Samsung created a white list for Exynos 5-based Galaxy S4 phones which allows some of the most popular benchmarking apps to shift into a high-performance mode not available to most applications. These apps run the GPU at 532MHz, while other apps cannot exceed 480MHz. This cheat was confirmed by AnandTech, which is the most respected name in both PC and mobile benchmarking. Samsung claims “the maximum GPU frequency is lowered to 480MHz for certain gaming apps that may cause an overload, when they are used for a prolonged period of time in full-screen mode,” but it doesn’t make sense that S Browser, Gallery, Camera and the Video Player apps can all run with the GPU wide open, while all games are forced to run at a much lower speed.

Samsung isn’t the only manufacturer accused of cheating. Back in June Intel shouted at the top of their lungs about the results of an ABI Research report that claimed their Atom processor outperformed ARM chips by Nvidia, Qualcomm and Samsung. This raised quite a few eyebrows and further research showed the Intel processor was not completely executing all of the instructions. After AnTuTu released an updated version of the benchmark, Intel’s scores dropped overnight by 20% to 50%. Was this really cheating? You can decide for yourself, but it’s hard to believe Intel didn’t know their chip was bypassing large portions of the tests AnTuTu was running. It’s also possible to fake benchmark scores as in this example.

Intel has even gone so far as to create their own suite of benchmarks that they admit favor Intel processors. You won’t find the word “Intel” anywhere on the BenchmarkXPRT website, but if you check the small print on some Intel websites you’ll find they admit “Intel is a sponsor and member of the BenchmarkXPRT Development Community, and was the major developer of the XPRT family of benchmarks.” Intel also says “Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors.” Bottom line: Intel made these benchmarks to make Intel processors look good and others look bad.
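To make the white-list trick described above more concrete, here is a minimal sketch of the idea. It is not Samsung's actual code: the package names are hypothetical placeholders, and the only values taken from this article are the two GPU frequency ceilings (532MHz and 480MHz).

```python
# Illustrative sketch only; not taken from any real device firmware.
# The package names below are hypothetical placeholders.
BENCHMARK_WHITELIST = {"com.example.benchmark_a", "com.example.benchmark_b"}

BOOSTED_GPU_MHZ = 532  # ceiling reported for white-listed benchmark apps
DEFAULT_GPU_MHZ = 480  # ceiling reported for other apps, such as games


def max_gpu_mhz(package_name: str) -> int:
    """Return the GPU frequency ceiling an app would be allowed to reach."""
    if package_name in BENCHMARK_WHITELIST:
        return BOOSTED_GPU_MHZ
    return DEFAULT_GPU_MHZ


def boost_percent() -> float:
    """Extra GPU clock headroom granted to white-listed apps, in percent."""
    return (BOOSTED_GPU_MHZ - DEFAULT_GPU_MHZ) / DEFAULT_GPU_MHZ * 100


print(max_gpu_mhz("com.example.benchmark_a"))  # white-listed app
print(max_gpu_mhz("com.example.some_game"))    # ordinary app
print(round(boost_percent(), 1))               # extra headroom in percent
```

Under these assumptions, a white-listed benchmark gets roughly 11% more GPU clock headroom than a game running on the same phone.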

- Benchmarks measure performance without considering power consumption – Benchmarks were first created for desktop PCs. These PCs were always plugged into the wall and had multiple fans and large heat-sinks to dissipate the massive amounts of power they consumed. The mobile world couldn’t be more different. Your phone is rarely plugged into the wall, even when you are gaming. Your mobile device is also very limited in the amount of heat it can dissipate, and battery life drops as heat increases. It doesn’t matter if your mobile device is capable of incredible benchmark scores if your battery dies in only an hour or two. Mobile benchmarks don’t factor in the power needed to achieve a certain level of performance. That’s a huge oversight, because the best chip manufacturers spend incredible amounts of time optimizing power usage. Even though one processor might slightly underperform another in a benchmark, it could be far superior, because it consumed half the power of the other chip. You’d have no way to know this without expensive hardware capable of performing this type of measurement.
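One way to picture the point about power is to divide a benchmark score by the average power drawn while the test ran. The sketch below does that with two made-up chips. All of the scores and wattages are hypothetical numbers chosen for illustration, not measurements of any real processor.

```python
def score_per_watt(score: float, avg_power_watts: float) -> float:
    """Benchmark points delivered per watt of average power draw."""
    if avg_power_watts <= 0:
        raise ValueError("average power must be positive")
    return score / avg_power_watts


# Hypothetical chips: chip B scores 5% lower but draws half the power.
chip_a = score_per_watt(score=10_000, avg_power_watts=4.0)
chip_b = score_per_watt(score=9_500, avg_power_watts=2.0)

print(chip_a)  # points per watt for the "faster" chip
print(chip_b)  # points per watt for the more frugal chip
```

With these assumed numbers, the chip that loses the raw benchmark delivers almost twice the work per watt, which is exactly what a score alone can't show.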

- Benchmarks rarely predict real-world performance – Many benchmarks favor graphics performance and have little bearing on the things real consumers do with their phones. For example, no one watches hundreds of polygons draw on their screens, but that’s exactly the type of thing benchmarks do. Even mobile gamers are unlikely to see increased performance on devices which score higher, because most popular games don’t stress the CPU and GPU the same way benchmarks do. Benchmarks like GLBenchmark 2.5 focus on things like high-level 3D animations. One reviewer recently said, “Apple’s A6 has an edge in polygon performance and that may be important for ultra-high resolution games, but I have yet to see many of those. Most games that I’ve tried on both platforms run in lower resolution with an up-scaling.” For more on this topic, scroll down to the section titled: “Case Study 2: Is the iPhone 5 Really Twice as Fast?”

This video shows that the iPhone 5s is only slightly faster than the iPhone 5 when it comes to real-world tests. For example, the iPhone 5s starts up only 1 second faster than the iPhone 5 (23 seconds vs. 24 seconds). The iPhone 5s only loads the Reddit.com site 0.1 seconds faster than the iPhone 5. These differences are so small it’s unlikely anyone would even notice them. Would you believe the iPhone 4 shuts down five times faster than the iPhone 5s? It’s true (4 seconds vs. 21.6 seconds). Another video shows that even though the iPhone 5s does better on most graphics benchmarks, when it comes to real-world things like scrolling a webpage in the Chrome browser, Android devices scroll significantly faster than an iPhone 5s running iOS 7. See for yourself in this video.

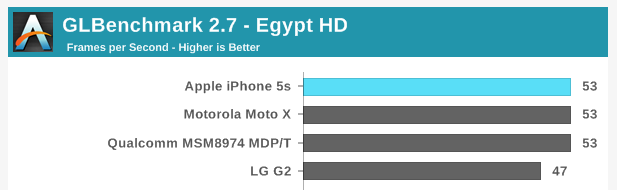

The iPhone 5s appears to do well on graphics benchmarks until you realize that Android phones have almost 3x the pixels

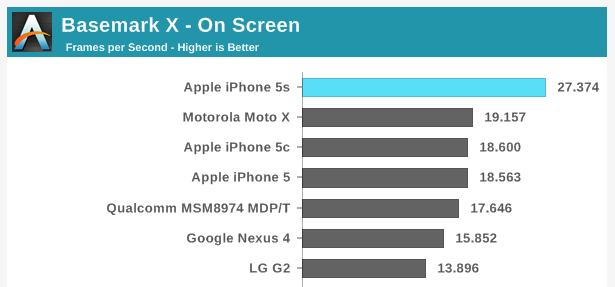

- Some benchmarks penalize devices with more pixels – Most graphics benchmarks measure performance in terms of frames per second. GFXBench (formerly GLBenchmark) is the most popular graphics benchmark. Apple has dominated the scores of this benchmark for one simple reason: Apple’s iPhone 4, 4S, 5 and 5s displays all have a fraction of the pixels flagship Android devices have. For example, in the chart above, the iPhone 5s gets a score of 53 fps, while the LG G2 gets a score of 47 fps. Most people would be impressed by the fact that the iPhone 5s got a score that was 12.8% higher than the LG G2, but when you consider the fact the LG G2 is pushing almost 3x the pixels (2073600 pixels vs. 727040 pixels), it’s clear the Adreno 330 GPU in the LG G2 is actually killing the GPU in the iPhone 5s. The GFXBench scores on the 720p Moto X (shown above) are further proof that what I am saying is true. This bias against devices with more pixels isn’t limited to GFXBench. You can see the same behavior with graphics benchmarks like Basemark X shown below (where the Moto X beats the Nexus 4).

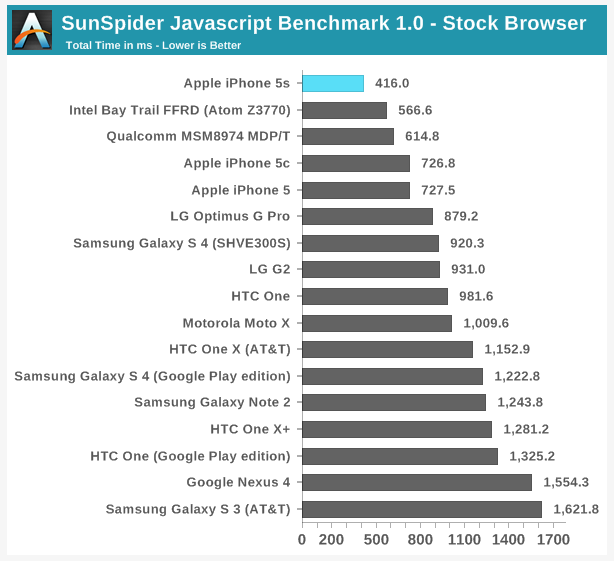
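The pixel argument above is easy to check with a little arithmetic. The sketch below multiplies each phone's frame rate by its pixel count to estimate how many pixels per second it draws. It assumes the fps figures were measured at each device's native screen resolution (1136x640 for the iPhone 5s and 1920x1080 for the LG G2), and it ignores every other factor that affects GPU workload, so treat it as a rough comparison only.

```python
# Frame rates quoted in this article; resolutions match the pixel counts
# given above (727040 and 2073600).
IPHONE_5S = {"fps": 53, "width": 1136, "height": 640}
LG_G2 = {"fps": 47, "width": 1920, "height": 1080}


def pixels(device: dict) -> int:
    """Total pixels on the device's display."""
    return device["width"] * device["height"]


def pixels_per_second(device: dict) -> int:
    """Rough pixel throughput: frames per second times pixels per frame."""
    return device["fps"] * pixels(device)


pixel_ratio = pixels(LG_G2) / pixels(IPHONE_5S)
throughput_ratio = pixels_per_second(LG_G2) / pixels_per_second(IPHONE_5S)

print(round(pixel_ratio, 2))       # how many times more pixels the G2 has
print(round(throughput_ratio, 2))  # how many times more pixels/sec it draws
```

By this crude measure the LG G2 is drawing roughly two and a half times as many pixels per second as the iPhone 5s, even though its fps score is lower.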

- Some popular benchmarks are no longer relevant – SunSpider is a popular JavaScript benchmark that was designed to compare different browsers. However, according to at least one expert, “the data that SunSpider uses is a small enough benchmark that it’s become more of a cache test. That’s one reason why Google came out with their V8 and Octane benchmark suites, both are better JavaScript tests than SunSpider.” According to Google, Octane is based upon a set of well-known web applications and libraries. This means, “a high score in the new benchmark directly translates to better and smoother performance in similar web applications.” Even though it may no longer be relevant as an indicator of JavaScript browsing performance, SunSpider is still quoted by many bloggers. SunSpider isn’t the only popular benchmark with issues; this blogger says BrowserMark also has problems.

SunSpider is a good example of a benchmark which may no longer be relevant — yet people continue to use it

- Benchmark scores are not always repeatable – In theory, you should be able to run the same benchmark on the same phone and get the same results over and over, but this doesn’t always occur. If you run a benchmark immediately after a reboot and then run the same benchmark during heavy use, you’ll get different results. Even if you reboot every time before you benchmark, you’ll still get different scores due to memory allocation, caching, memory fragmentation, OS house-keeping and other factors like throttling.

Another reason you’ll get different scores on devices running exactly the same mobile processors and operating system is that different devices have different apps running in the background. For example, Nexus devices have far fewer apps running in the background than non-Nexus carrier-issued devices. Even after you close all running apps, there are still apps running in the background that you can’t see, yet these apps are consuming system resources and can have an effect on benchmark scores. Some apps run automatically to perform housekeeping for a short period and then close. The number and types of apps vary greatly from phone to phone and platform to platform, so this makes objective testing of one phone against another difficult.

Benchmark scores sometimes change after you upgrade a device to a new operating system. This makes it difficult to compare two devices running different versions of the same OS. For example, the Samsung Galaxy S III running Android 4.0 gets a Geekbench score of 1560, while the same exact phone running Android 4.1 gets a Geekbench score of 1781. That’s a 14% increase. The Android 4.4 OS causes many benchmark scores to increase, but not in all cases. For example, after moving to Android 4.4, Vellamo 2 scores drop significantly on some devices because it can’t make use of some aspects of hardware acceleration due to Google’s changes.

Perhaps the biggest reason benchmark scores change over time is that they stress the processor, increasing its temperature. When the processor temperature reaches a certain level, the device starts to throttle or reduce power. This is one of the reasons scores on benchmarks like AnTuTu change when they are run consecutive times. Other benchmarks have the same problem. In this video, the person testing several phones gets a Quadrant Standard score on the Nexus 4 that is 4569 on the first run and 4826 on a second run (skip to 14:25 to view).
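To put the score swings quoted above into perspective, here is a small sketch that works out the percentage change for the two examples in this section. The scores come from this article; the helper function is just ordinary percent-change arithmetic.

```python
def percent_change(before: float, after: float) -> float:
    """Percentage change from one benchmark score to another."""
    return (after - before) / before * 100


# Galaxy S III Geekbench scores: Android 4.0 vs. Android 4.1
geekbench_change = percent_change(1560, 1781)

# Nexus 4 Quadrant Standard scores: first run vs. second run
quadrant_change = percent_change(4569, 4826)

print(round(geekbench_change, 1))  # change caused by an OS upgrade
print(round(quadrant_change, 1))   # change between two back-to-back runs
```

The same phone moves about 14% from an OS update alone, and almost 6% between two consecutive runs. Any difference between two phones that is smaller than this kind of swing deserves some skepticism.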

- Not all mobile benchmarks are cross-platform – Many mobile benchmarks are Android-only and can’t help you to compare an Android phone to the iPhone 5. Here are just a few popular mobile benchmarks which are not available for iOS and other mobile platforms: AnTuTu Benchmark, Octane, Neocore, NenaMark, Quadrant Standard and Vellamo.

- Some benchmarks are not yet 64-bit — Android 5.0 supports 64-bit apps, but most benchmarks do not run in 64-bit mode yet. There are a few exceptions to this rule. A few Java-based benchmarks (Linpack, Quadrant) run in 64-bit mode and do see performance benefits on systems with 64-bit OS and processors. AnTuTu also supports 64-bit.

- Mobile benchmarks are not time-tested — Most mobile benchmarks are relatively new and not as mature as the benchmarks which are used to test Macs and PCs. The best computer benchmarks are real world, relevant and produce repeatable scores. There is some encouraging news in this area however — now that 3DMark is available for mobile devices. It would be nice if someone ported other time-tested benchmarks like SPECint to iOS as well.

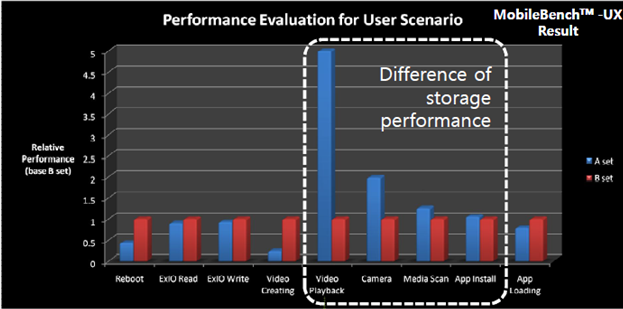

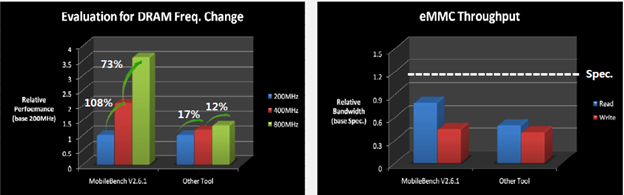

- Inaccurate measurement of memory and storage performance – There is evidence that existing mobile benchmarks do not accurately measure the impact of faster memory speeds or storage performance. Examples above and below. MobileBench is supposed to address this issue, but it would be better if there were a reliable benchmark that was not partially created by memory suppliers like Samsung.

- Inaccurate measurement of the heterogeneous nature of mobile devices – Only 15% of a mobile processor is the CPU. Modern mobile processors also have DSPs, image processing cores, sensor cores, audio and video decoding cores, and more, but not one of today’s mobile benchmarks can measure any of this. This is a big problem.

Case Study 1: Is the New iPad Air Really 2-5x as Fast As Other iPads?

There have been a lot of articles lately about the benchmark performance of the new iPad Air. The writers of these articles truly believe that the iPad Air is dramatically faster than any other iPad, but most real-world tests don’t show this to be true. This video compares 5 generations of iPads.

Results of side-by-side video comparisons between the iPad Air and other iPads:

- Test 1 – Start Up – The iPad Air started up 5.73 seconds faster than the iPad 1. That’s 23% faster, yet the Geekbench 3 benchmark suggests the iPad Air should be over 500% faster than an iPad 2. I would expect the iPad Air to be more than 23% faster than a product that came out 3 years and 6 months ago. Wouldn’t you?

- Test 2 – Page load times – The narrator claims the iPad Air’s new MIMO antennas are part of the reason the new iPad Air loads webpages so much faster. First off, MIMO antennas are not new in mobile devices; they were in the Kindle HD two generations ago. Second, Apple’s MIMO implementation apparently isn’t effective, because if you freeze frame the video just before 1:00, you’ll see the iPad 4 clearly loads all of the text on the page before the iPad Air. All of the images on the webpage load on the iPad 4 and the iPad Air at exactly the same time, even though browser-based benchmarks suggest the iPad Air should load web pages much faster.

- Test 3 – Video Playback – On the video playback test, the iPad Air was no more than 15.3% faster than the iPad 4 (3.65s vs. 4.31s).

Reality: Although most benchmarks suggest the iPad Air should be 2-5x faster than older iPads, at best, the iPad Air is only 15-25% faster than the iPad 4 in real-world usage, and in some cases it is no faster.
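For anyone who wants to check the math on the video playback test, the sketch below shows two common ways to express the gap between two timings. The 3.65s and 4.31s figures come from the test results above; the function names are my own and are only for illustration.

```python
def time_reduction_percent(old_seconds: float, new_seconds: float) -> float:
    """How much less time the newer device needs, as a percentage."""
    return (old_seconds - new_seconds) / old_seconds * 100


def speedup_factor(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the newer device completes the task."""
    return old_seconds / new_seconds


# Video playback test: iPad 4 (4.31s) vs. iPad Air (3.65s)
reduction = time_reduction_percent(4.31, 3.65)
speedup = speedup_factor(4.31, 3.65)

print(round(reduction, 1))  # percent less time on the iPad Air
print(round(speedup, 2))    # speedup factor, to compare against "2-5x"
```

Either way you express it, the measured gap is a long way from the 2-5x that benchmarks suggest.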

Final Thoughts

You should never make a purchasing decision based on benchmarks alone. Most popular benchmarks are flawed because they don’t predict real-world performance and they don’t take into consideration power consumption. They measure your mobile device in a way that you never use it: running all-out while it’s plugged into the wall. It doesn’t matter how fast your mobile device can operate if your battery only lasts an hour. For this reason, top benchmarking bloggers like AnandTech have stopped using the AnTuTu, BenchmarkPi, Linpack and Quadrant benchmarks, but they still continue to propagate the myth that benchmarks are an indicator of real-world performance. They claim they use them because they aren’t subjective, but then they mislead their readers about their often meaningless nature.

Some benchmarks do have their place, however. Even though they are far from perfect, they can be useful if you understand their limitations. Still, you shouldn’t read too much into them. They are just one indicator, along with product specs and side-by-side real-world comparisons between different mobile devices.

Bloggers should spend more time measuring things that actually matter like start-up and shutdown times, Wi-Fi and mobile network speeds in controlled reproducible environments, game responsiveness, app launch times, browser page load times, task switching times, actual power consumption on standardized tasks, touch-panel response times, camera response times, audio playback quality (S/N, distortion, etc.), video frame rates and other things that are related to the ways you use your device.
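As a rough illustration of what measuring a real task in a reproducible way could look like, here is a minimal timing sketch. It is not a real device test tool: the `time_task` helper and the stand-in workload are hypothetical, and on an actual phone the task would be something like an app launch or page load triggered by whatever automation you have available. The idea is simply to repeat the same task several times and report the median along with the spread, instead of quoting a single run.

```python
import statistics
import time
from typing import Callable, Dict


def time_task(task: Callable[[], object], runs: int = 5) -> Dict[str, float]:
    """Run the same task several times and summarize the wall-clock timings."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "max_s": max(samples),
    }


def stand_in_workload() -> int:
    """Placeholder for a real-world task such as loading a page."""
    return sum(range(100_000))


result = time_task(stand_in_workload, runs=5)
print(result)
```

Reporting the minimum and maximum next to the median makes run-to-run variation visible, which matters given how much scores can drift between runs.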

Although most of today’s mobile benchmarks are flawed, there is some hope for the future. Broadcom, Huawei, OPPO, Samsung Electronics and Spreadtrum recently announced the formation of MobileBench, a new industry consortium formed to provide more effective hardware and system-level performance assessment of mobile devices. They have a proposal for a new benchmark that is supposed to address some of the issues I’ve highlighted above. You can read more about this here.

A Mobile Benchmark Primer

If you are wondering which benchmarks are the best, and which should not be used, this primer should be of use.

Case Study 2: Is the iPhone 5 Really Twice as Fast?

Note: Although this section was written about the iPhone 5, this section applies equally to the iPhone 5s. Like the iPhone 5, experts say the iPhone 5s is twice as fast in some areas — yet most users will notice little if any differences that are related to hardware alone. The biggest differences are related to changes in iOS 7 and the new registers in the A7.

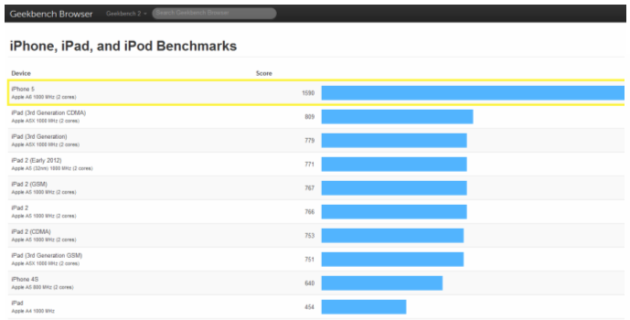

Apple and most tech writers believe the iPhone 5’s A6 processor is twice as fast as the chip in the iPhone 4S. Benchmarks like the one in the above chart support these claims. This video tests these claims.

In tests like this one, the iPhone 4S beats the iPhone 5 when benchmarks suggest it should be twice as slow.

Results of side-by-side comparisons between the iPhone 5 and the iPhone 4S:

- Opening the Facebook app is faster on the iPhone 4S (skip to 7:49 to see this).

- The iPhone 4S also recognizes speech much faster, although the iPhone 5 returns the results to a query faster (skip to 8:43 to see this). In a second test, the iPhone 4S once again beats the iPhone 5 in speech recognition and almost ties it in returning the answer to a math problem (skip to 9:01 to see this).

- App launch times vary; in some cases the iPhone 5 wins, in others the iPhone 4S wins.

- The iPhone 4S beats the iPhone 5 easily when SpeedTest is run (skip to 10:32 to see this).

- The iPhone 5 does load web pages and games faster than the iPhone 4S, but it’s nowhere near twice as fast (skip to 12:56 on the video to see this).

I found a few other comparison videos like this one, which show similar results. As the video says, “Even with games like ‘Wild Blood’ (shown in the video at 5:01) which are optimized for the iPhone 5s screen size, looking closely doesn’t really reveal anything significant in terms of improved detail, highlighting, aliasing or smoother frame-rates.” He goes on to say, “the real gains seem to be in the system RAM which does contribute to improved day to day performance of the OS and apps.”

So the bottom line is: Although benchmarks predict the iPhone 5 should be twice as fast as the iPhone 4S, in real-world tests the difference between the two is not that large and is partially due to the fact that the iPhone 5 has twice as much memory. In some cases, the iPhone 4S is actually faster, because it has fewer pixels to display on the screen. The same is true for tests of the iPad 4, which reviewers say “performs at least twice as fast as the iPad 3.” However, when it comes to actual game play, the same reviewer says, “I couldn’t detect any difference at all. Slices, parries and stabs against the monstrous rivals in Infinity Blade II were fast and responsive on both iPads. Blasting pirates in Galaxy on Fire HD 2 was a pixel-perfect exercise on the two tablets, even at maximum resolution. And zombie brains from The Walking Dead spattered just as well on the iPad 3 as the iPad 4.”

– Rick

Copyright 2012-2014 Rick Schwartz. All rights reserved. This article includes the opinions of the author and does not reflect the views of his employer. Linking to this article is encouraged.

Follow me on Twitter @mostlytech1